"Tell me," Wittgenstein once asked a friend, "why do people always say it was natural for man to assume that the sun went round the earth rather than that the earth was rotating?" His friend replied, "Well, obviously because it just looks as though the sun is going around the earth." Wittgenstein replied, "Well, what would it have looked like if it had looked as though the earth was rotating?"

Hello, I am Pulkit Malhotra, a student at the University of California San Diego, currently pursuing a major in Physics and Philosophy. My primary interest is in the area where the two fields overlap, where you have to use the concepts of one field to analyze the basics of the other in order to move forward. The reason I took Philosophy and Physics as my major is that the two subjects are similar once you get down to the elementary level: they both rely on studying the observer as much as the thing observed. That seems weird at first, considering we have always been taught that physics is a mathematics-based experimental field where one theorizes, then experiments, then confirms, whereas philosophy is a field that requires ‘abstract thought’; we have all heard the apocryphal stories of Socrates sitting in one spot the entire day, lost in thought about the ‘heavens’. But after a point, the two become similar. Let me tell you what we deal with in each subject and you can make the call for yourself.

In philosophy, the main question is: can man know or do anything objectively? Is there any knowledge independent of humans, or is the knowledge we share simply a result of our all being human and having some features in common? In physics, after quantum mechanics, we ask: is the particle moving this way objectively, or is it moving like this only because there is an observer in the picture? Are all particles entangled with each other, so that their inherent information is fixed from the moment they come into existence, or do their properties change because of the observation?

Sounds pretty similar to me.

To summarize, as my friend once joked, the combination of physics and philosophy is an interesting one to study: you say that the particle moves like this, and at the same time you ask why it moves like this.

My university research lies at the heart of particle physics, the LHC. I was fortunate enough to meet a professor who works on the ATLAS experiment at CERN, in the data analytics team. In this project, we take an established field (particle physics) and try to study it using an up-and-coming field (machine learning).

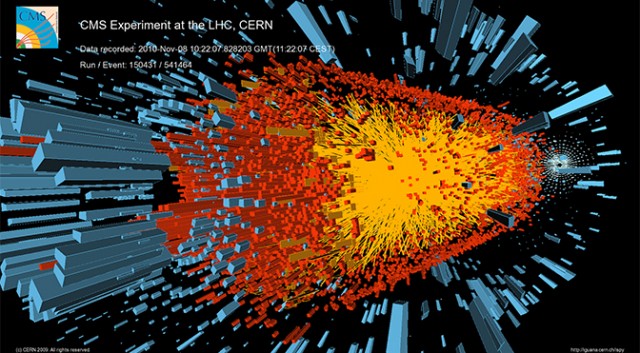

When two particles collide in the LHC, a large number of subatomic particles are given out. They are detected by different sensors that check different properties: calorimeters, for example, measure the energy that has been given out, while other detectors look at the radiation a particle emits. When a charged particle travels faster than light does through a given medium, it emits Cherenkov radiation at an angle that depends on its velocity. The particle's velocity can be calculated from this angle. Velocity can then be combined with a measure of the particle's momentum to determine its mass, and therefore its identity. When a fast charged particle crosses the boundary between two electrical insulators with different resistances to electric currents, it emits transition radiation. The phenomenon is related to the energy of the particle and so can distinguish different particle types. Taking all these clues from different parts of the detector, physicists build up a snapshot of what was in the detector at the moment of a collision. The next step is to search the collisions for unusual particles, or for results that do not fit current theories. This is how the Higgs boson was discovered.
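The chain from Cherenkov angle to velocity to mass can be sketched in a few lines. This is only an illustration, assuming the standard relations cos θ = 1/(nβ) and p = γmβ in natural units (momentum in GeV/c, mass in GeV/c²). The function names, the refractive index and the example particle are all hypothetical choices for the sketch, not values from any real detector.

```python
import math

def beta_from_cherenkov_angle(theta_rad, n):
    """Velocity as a fraction of c, from cos(theta) = 1 / (n * beta)."""
    beta = 1.0 / (n * math.cos(theta_rad))
    if not 0.0 < beta < 1.0:
        raise ValueError("angle and refractive index give an unphysical velocity")
    return beta

def mass_from_momentum(p_gev, beta):
    """Mass in GeV/c^2 from momentum in GeV/c, using p = gamma * m * beta."""
    return p_gev * math.sqrt(1.0 / beta**2 - 1.0)

def cherenkov_angle(p_gev, mass_gev, n):
    """Forward direction: the angle a particle of known mass would emit at."""
    beta = p_gev / math.hypot(p_gev, mass_gev)
    cos_theta = 1.0 / (n * beta)
    if cos_theta > 1.0:
        raise ValueError("particle is below the Cherenkov threshold in this medium")
    return math.acos(cos_theta)

# Hypothetical example: a 10 GeV/c particle in a gas with n = 1.0014 (assumed value).
n_gas = 1.0014
theta = cherenkov_angle(10.0, 0.4937, n_gas)      # pretend this angle was measured
beta = beta_from_cherenkov_angle(theta, n_gas)    # step 1: angle -> velocity
mass = mass_from_momentum(10.0, beta)             # step 2: velocity + momentum -> mass
print(f"angle = {theta:.4f} rad, beta = {beta:.6f}, mass = {mass:.4f} GeV/c^2")
```

The recovered mass can then be compared against the known masses of candidate particles to guess the identity, which is the step the paragraph above describes.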

This method, though great, has its limitations. What if the particle has no charge and doesn’t interact? Or what if, like the Higgs boson (the so-called ‘God particle’), it has an extremely short lifetime and decays almost immediately into other, more common particles?

The CERN Data Center stores more than 30 petabytes of data per year from the LHC experiments, enough to fill about 1.2 million Blu-ray discs, i.e. 250 years of HD video. Over 100 petabytes of data are permanently archived on tape. Hence the search can’t be human-centric, and the question becomes: what to record and what to leave?

The volume of data produced at the Large Hadron Collider (LHC) presents a considerable processing challenge. Particles collide at high energies inside CERN's detectors, creating new particles that decay in complex ways as they move through layers of sub-detectors. The sub-detectors register each particle's passage and microprocessors convert the particles' paths and energies into electrical signals, combining the information to create a digital summary of the "collision event". The raw data per event is around one million bytes (1 MB), produced at a rate of about 600 million events per second.
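To get a feel for these numbers, here is a quick back-of-the-envelope calculation. It takes the figures quoted in this post at face value (one million bytes per event, 600 million events per second, about 30 petabytes stored per year) and uses decimal units; the variable names are just for illustration.

```python
EVENT_SIZE_BYTES = 1_000_000            # about one million bytes per event
EVENTS_PER_SECOND = 600_000_000         # about 600 million events per second
STORED_PER_YEAR_BYTES = 30 * 10**15     # about 30 petabytes kept per year

# Raw, unfiltered data rate if every event were written out.
raw_bytes_per_second = EVENT_SIZE_BYTES * EVENTS_PER_SECOND
raw_terabytes_per_second = raw_bytes_per_second / 10**12

# How long the unfiltered stream would take to fill one year's storage.
seconds_to_fill_yearly_storage = STORED_PER_YEAR_BYTES / raw_bytes_per_second

print(f"raw rate: {raw_terabytes_per_second:.0f} TB/s")
print(f"one year of storage would fill in {seconds_to_fill_yearly_storage:.0f} s")
```

Under these assumptions the unfiltered stream would use up a whole year's storage in under a minute, which is why almost everything has to be thrown away before it is recorded.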

The data flow from all four experiments for Run 2 is anticipated to be about 25 GB/s (gigabytes per second):

ALICE: 4 GB/s (Pb-Pb running)

ATLAS: 800 MB/s – 1 GB/s

CMS: 600 MB/s

LHCb: 750 MB/s

The LHC experiments tackle this mountain of data in a two-stage selection process before it is passed on for storage and analysis. They run dedicated algorithms written by physicists to reduce the number of events and select those considered interesting, so that analysis can focus on the most important data: that which could bring new physics measurements or discoveries.

In the first stage of the selection, called the trigger stage (as in what will trigger the detector to record an event), the number of events is filtered from the 600 million or so per second picked up by the detectors down to 100,000 per second sent for digital reconstruction. In the second stage, more specialized algorithms further process the data, leaving only 100 or 200 events of interest per second. This raw data is recorded onto servers at the CERN Data Center at a rate of around 1.5 CDs per second (approximately 1050 megabytes per second). Physicists belonging to worldwide collaborations work continuously to improve detector-calibration methods and to refine processing algorithms to detect ever more interesting events.
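The size of each cut is easier to appreciate as a ratio. The short calculation below uses only the rates quoted above; the 700 MB capacity of a CD is my own assumption, chosen because it reproduces the quoted 1050 megabytes per second.

```python
COLLISION_RATE = 600_000_000      # events per second seen by the detectors
AFTER_STAGE_ONE = 100_000         # events per second sent for reconstruction
AFTER_STAGE_TWO = (100, 200)      # events per second kept (quoted range)
CD_CAPACITY_MB = 700              # assumed capacity of one CD, in megabytes

# Reduction factor of each stage: how many events go in for every one that survives.
stage_one_factor = COLLISION_RATE / AFTER_STAGE_ONE
stage_two_factors = [AFTER_STAGE_ONE / rate for rate in AFTER_STAGE_TWO]

# Fraction of all collisions that is finally recorded.
kept_fractions = [rate / COLLISION_RATE for rate in AFTER_STAGE_TWO]

# Cross-check of the "1.5 CDs per second" figure.
recording_rate_mb = 1.5 * CD_CAPACITY_MB

print(f"stage one keeps 1 event in {stage_one_factor:.0f}")
print(f"stage two keeps 1 event in {stage_two_factors[1]:.0f} to {stage_two_factors[0]:.0f}")
print(f"recording rate: {recording_rate_mb:.0f} MB/s")
```

In other words, only a few events out of every ten million collisions survive both stages.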

Our team works in the trigger stage, designing different machine learning algorithms to be put in the first stage of detection. This filtering can’t be done by humans, but machines are fast, so we can develop different types of algorithms to filter the data quickly at the trigger. Improvements in machine learning over the past few years have made this process quicker and more streamlined, and we are now sensitive to more types of data and to how they make their way through our detectors. What makes machine learning easier at the LHC is that the data set we have for testing the algorithms is already huge, so the program can be trained accurately.
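To give a flavour of what "training an algorithm to filter events" means, here is a deliberately tiny toy. It is not our team's method and not real detector data: the events are synthetic, the two features are made up, and a nearest-centroid classifier stands in for a real trigger model purely because it fits in a few lines.

```python
import random

def make_events(n, centre, rng):
    """Draw n synthetic events with two made-up features around `centre`."""
    return [(rng.gauss(centre, 1.0), rng.gauss(centre, 1.0)) for _ in range(n)]

def centroid(events):
    """Mean position of a list of two-feature events."""
    n = len(events)
    return (sum(e[0] for e in events) / n, sum(e[1] for e in events) / n)

def squared_distance(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2

def train(background, signal):
    """'Training' here is just computing the mean of each labelled class."""
    return centroid(background), centroid(signal)

def keep_event(event, model):
    """Keep the event if it lies closer to the signal centroid."""
    background_centre, signal_centre = model
    return squared_distance(event, signal_centre) < squared_distance(event, background_centre)

rng = random.Random(0)  # fixed seed so the toy is reproducible

# Labelled training sample: "boring" events near 0, "interesting" ones near 3.
model = train(make_events(500, 0.0, rng), make_events(500, 3.0, rng))

# Fresh events the model has not seen.
test_background = make_events(1000, 0.0, rng)
test_signal = make_events(1000, 3.0, rng)

signal_efficiency = sum(keep_event(e, model) for e in test_signal) / len(test_signal)
background_rejection = sum(not keep_event(e, model) for e in test_background) / len(test_background)

print(f"signal kept: {signal_efficiency:.1%}, background rejected: {background_rejection:.1%}")
```

The two numbers printed at the end are the trade-off any trigger has to balance: keep as many interesting events as possible while throwing away as much of the rest as possible. A larger labelled sample pins the class means down more precisely, which is the toy version of the point about huge data sets above.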

The field is still in its nascent stages, but that doesn’t stop the work from being exciting. As Feynman once said, “No problem is too small or too trivial if we can really do something about it.”
